commit 0bde0149e6

11 changed files with 239 additions and 59 deletions

21

Dockerfile

@@ -1,7 +1,7 @@
FROM alpine

LABEL maintainer "Alexey Nizhegolenko <ratibor78@gmail.com>"
LABEL description "Geostat app"
LABEL description "Geostat application"


# Copy the requirements file

@@ -9,21 +9,22 @@ COPY requirements.txt /tmp/requirements.txt

# Install all needed packages
RUN apk add --no-cache \
python2 \
python3 \
bash && \
python2 -m ensurepip && \
python3 -m ensurepip && \
rm -r /usr/lib/python*/ensurepip && \
pip2 install --upgrade pip setuptools && \
pip2 install -r /tmp/requirements.txt && \
pip3 install --upgrade pip setuptools && \
pip3 install -r /tmp/requirements.txt && \
rm -r /root/.cache

# Download Geolite base
RUN wget https://geolite.maxmind.com/download/geoip/database/GeoLite2-City.tar.gz && \
mkdir tmpgeo && tar -xvf GeoLite2-City.tar.gz -C ./tmpgeo && \
cp /tmpgeo/*/GeoLite2-City.mmdb / && rm -rf ./tmpgeo
# Copy the Geolite base
ADD GeoLite2-City.mmdb /

#Copy the geohash lib locally
ADD geohash /

# Copy the application file
ADD geoparser.py /

# Run our app
CMD [ "python", "./geoparser.py"]
CMD [ "python3", "./geoparser.py"]

BIN

GeoLite2-City.mmdb

Normal file

Binary file not shown. Size after: 63 MiB

66

README.md

66

README.md

|

|

@ -1,15 +1,26 @@

|

|||

# GeoStat

|

||||

### Version 1.0

|

||||

### Version 2.0

|

||||

|

||||

|

||||

|

||||

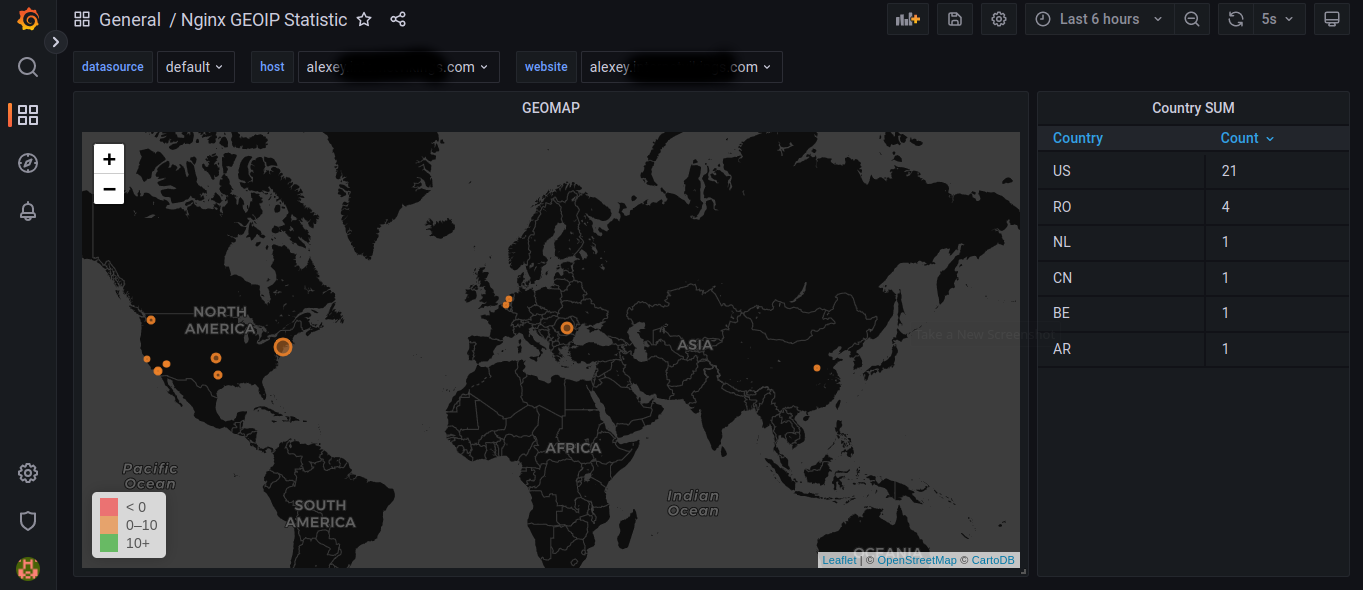

GeoStat is a Python script for parsing Nginx logs files and getting GEO data from incoming IP's in it. This script converts parsed data into JSON format and sends it to the InfluxDB database so you can use it to build some nice Grafana dashboards for example. It runs as service by SystemD and parses log in tailf command style. Also, it can be run as a Docker container for the easy start.

|

||||

GeoStat it's a Python-based script for parsing Nginx and Apache log files and getting GEO data from incoming IPs from it. This script converts parsed data into JSON format and sends it to the InfluxDB database, so you can use it for building nice Grafana dashboards for example. The application runs as SystemD service and parses log files in "tailf" style. Also, you can run it as a Docker container if you wish.

|

||||

|

||||

# New in version 2.0
- The application was rewritten in Python 3.
- Added a few additional tags to the JSON output: the country name and the city name.
- Fixed a few bugs in the geohash lib and in the install.sh script.
- Added a simple logging feature: the application log can now be found in the Syslog file.
- The Dockerfile was recreated with Python 3 support as well.
- All needed tests were done, and everything looks OK :)

# Main Features:

- Parsing incoming IPS from web server log and convert them into GEO metrics for the InfluxDB.
- Used standard python libs for maximum compatibility.
- Having an external **settings.ini** for comfortable changing parameters.
- Have a Docker file for quick building Docker image.
- Parses incoming IPs from the web server log and converts them into GEO metrics for InfluxDB.
- Uses standard Python libs for maximum ease of use.
- Has an external **settings.ini** file for convenient parameter changes.
- Includes a Dockerfile for quickly building a Docker image.
- Contains an install.sh script that makes the installation process easy.
- Runs as a SystemD service.

JSON format that script sends to InfluxDB looks like:
```

@@ -23,33 +34,46 @@ JSON format that script sends to InfluxDB looks like:
'host': 'cube'
'geohash': 'u8mb76rpv69r',
'country_code': 'UA'
'country_name': 'Ukraine'
'city_name': 'Odessa'
}
}
]
```

As you can see there are three tags fields, so you can build dashboards using geohash (with a point on the map) or country code, or build dashboards with variables based on the host name tag. A count for any metric equals 1. This script doesn't parse log file from the beginning but parses it line by line after running. So you can build dashboards using **count** of geohashes or country codes after some time will pass.

As you can see, there are five tags in the JSON output, so you can build dashboards using the geohash (a point on the map), the country code, or the country name and city name. You can also build dashboards with variables based on the hostname tag, or combine them all. The count field of every point equals 1, which makes the data easy to sum. The script doesn't parse the log file from the beginning; it parses it line by line after it starts, so dashboards based on **count** fill in only after some time has passed.

You can find the example Grafana dashboard in **geomap.json** file or from grafana.com: https://grafana.com/dashboards/8342

You can find an example Grafana dashboard in the **geomap.json** file, or get it from grafana.com: https://grafana.com/dashboards/8342

### Tech

GeoStat uses a number of open source libs to work properly:

* [Geohash](https://github.com/vinsci/geohash) - Python module that provides functions for decoding and encoding Geohashes.
* [Geohash](https://github.com/vinsci/geohash) - Python module that provides functions for decoding and encoding Geohashes. It is now bundled as a local lib and no longer needs to be installed with pip.
* [InfluxDB-Python](https://github.com/influxdata/influxdb-python) - Python client for InfluxDB.

## Important
The GeoLite2-City database is no longer available for simple download; you now need to register on the maxmind.com website first.
After you get an account on maxmind.com, you can find the needed file at this link:

(https://www.maxmind.com/en/accounts/YOURACCOUNTID/geoip/downloads)

Please don't forget to unpack the GeoLite2-City.mmdb file and put it in the same directory as the geoparser.py script, or put it anywhere and then fix the geoipdb path in settings.ini.

# Installation

You can install it in a few ways:

Using install.sh script:
1) Clone the repository.
2) CD into dir and run **install.sh**, it will ask you to set a properly settings.ini parameters, like Nginx **access.log** path, and InfluxDB settings.
3) After the script will finish you only need to start SystemD service with **systemctl start geostat.service**.
2) CD into the directory and run **install.sh**; it will ask you to set the settings.ini parameters, such as the Nginx/Apache **access.log** path and the InfluxDB settings.
3) After the script finishes the installation, copy the GeoLite2-City.mmdb file into the application directory and start the SystemD service with **systemctl start geostat.service**.

Manually:
1) Clone the repository, create an environment and install requirements
```sh
$ cd geostat
$ virtualenv venv && source venv/bin/activate
$ pip install -r requirements.txt
$ python3 -m venv venv && source venv/bin/activate
$ pip3 install -r requirements.txt
```
2) Modify **settings.ini** & **geostat.service** files and copy service to systemd.
```sh

@@ -59,11 +83,9 @@ $ cp geostat.service.template geostat.service
$ vi geostat.service
$ cp geostat.service /lib/systemd/system/
```

3) Download latest GeoLite2-City.mmdb from MaxMind
3) Register and download the latest GeoLite2-City.mmdb file from maxmind.com
```sh
$ wget https://geolite.maxmind.com/download/geoip/database/GeoLite2-City.tar.gz
$ tar -xvzf GeoLite2-City.tar.gz
$ cp ./GeoLite2-City_some-date/GeoLite2-City.mmdb ./
$ cp ./any_path/GeoLite2-City.mmdb ./
```
4) Then enable and start service
```sh

@@ -71,17 +93,21 @@ $ systemctl enable geostat.service
$ systemctl start geostat.service
```

Using Docker image:
1) Build the docker image from the Dockerfile inside geostat repository directory run:
1) Build the Docker image using the Dockerfile inside the geostat repository directory:
```
$ docker build -t some-name/geostat .
```
2) After Docker image will be created you can run it using properly edited **settings.ini** file and you also,
2) Register and download the latest GeoLite2-City.mmdb file from maxmind.com and copy it into the repository directory. The Dockerfile ADDs this file at build time, so it must be in place before you run `docker build`:
```sh
$ cp ./any_path/GeoLite2-City.mmdb ./
```
3) Once the Docker image is built, you can run it with a properly edited **settings.ini** file; you also
need to forward the Nginx/Apache logfile inside the container:
```
docker run -d --name geostat -v /opt/geostat/settings.ini:/settings.ini -v /var/log/nginx_access.log:/var/log/nginx_access.log some-name/geostat
```

After the first metrics will go to the InfluxDB you can create nice Grafana dashboards.
After the first metrics reach InfluxDB, you can create nice dashboards in Grafana.

Have fun !
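The README notes that every point carries a `count` field equal to 1, so totals come from summing. Here is a minimal Python sketch of that aggregation. It computes roughly what a Grafana panel running `SELECT sum("count") FROM "geodata" GROUP BY "country_code"` would return. The helper name `sum_counts_by_tag` is hypothetical, and all sample points except the README's Odessa entry are made up for illustration:

```python
from collections import Counter


def sum_counts_by_tag(points, tag):
    """Sum the 'count' field of InfluxDB-style points, grouped by one tag.

    Each point is assumed to follow the README layout:
    {'measurement': ..., 'tags': {...}, 'fields': {'count': 1}}
    """
    totals = Counter()
    for point in points:
        totals[point['tags'][tag]] += point['fields']['count']
    return dict(totals)


# Hypothetical sample data; only the first point mirrors the README example.
points = [
    {'measurement': 'geodata',
     'tags': {'host': 'cube', 'country_code': 'UA', 'city_name': 'Odessa'},
     'fields': {'count': 1}},
    {'measurement': 'geodata',
     'tags': {'host': 'cube', 'country_code': 'UA', 'city_name': 'Kyiv'},
     'fields': {'count': 1}},
    {'measurement': 'geodata',
     'tags': {'host': 'cube', 'country_code': 'DE', 'city_name': 'Berlin'},
     'fields': {'count': 1}},
]

print(sum_counts_by_tag(points, 'country_code'))  # {'UA': 2, 'DE': 1}
```

Because every hit is stored as a separate point with the same constant field, grouping by any tag (host, country, city, geohash) yields hit counts without further bookkeeping in the script.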

21

geohash/__init__.py

Normal file

@@ -0,0 +1,21 @@
"""
Copyright (C) 2008 Leonard Norrgard <leonard.norrgard@gmail.com>
Copyright (C) 2015 Leonard Norrgard <leonard.norrgard@gmail.com>

This file is part of Geohash.

Geohash is free software: you can redistribute it and/or modify it
under the terms of the GNU Affero General Public License as published
by the Free Software Foundation, either version 3 of the License, or
(at your option) any later version.

Geohash is distributed in the hope that it will be useful, but WITHOUT
ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or
FITNESS FOR A PARTICULAR PURPOSE. See the GNU Affero General Public
License for more details.

You should have received a copy of the GNU Affero General Public
License along with Geohash. If not, see
<http://www.gnu.org/licenses/>.
"""
from .geohash import decode_exactly, decode, encode

109

geohash/geohash.py

Normal file

@@ -0,0 +1,109 @@
"""
Copyright (C) 2008 Leonard Norrgard <leonard.norrgard@gmail.com>
Copyright (C) 2015 Leonard Norrgard <leonard.norrgard@gmail.com>

This file is part of Geohash.

Geohash is free software: you can redistribute it and/or modify it
under the terms of the GNU Affero General Public License as published
by the Free Software Foundation, either version 3 of the License, or
(at your option) any later version.

Geohash is distributed in the hope that it will be useful, but WITHOUT
ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or
FITNESS FOR A PARTICULAR PURPOSE. See the GNU Affero General Public
License for more details.

You should have received a copy of the GNU Affero General Public
License along with Geohash. If not, see
<http://www.gnu.org/licenses/>.
"""
from math import log10

# Note: the alphabet in geohash differs from the common base32
# alphabet described in IETF's RFC 4648
# (http://tools.ietf.org/html/rfc4648)
__base32 = '0123456789bcdefghjkmnpqrstuvwxyz'
__decodemap = { }
for i in range(len(__base32)):
    __decodemap[__base32[i]] = i
del i

def decode_exactly(geohash):
    """
    Decode the geohash to its exact values, including the error
    margins of the result. Returns four float values: latitude,
    longitude, the plus/minus error for latitude (as a positive
    number) and the plus/minus error for longitude (as a positive
    number).
    """
    lat_interval, lon_interval = (-90.0, 90.0), (-180.0, 180.0)
    lat_err, lon_err = 90.0, 180.0
    is_even = True
    for c in geohash:
        cd = __decodemap[c]
        for mask in [16, 8, 4, 2, 1]:
            if is_even: # adds longitude info
                lon_err /= 2
                if cd & mask:
                    lon_interval = ((lon_interval[0]+lon_interval[1])/2, lon_interval[1])
                else:
                    lon_interval = (lon_interval[0], (lon_interval[0]+lon_interval[1])/2)
            else: # adds latitude info
                lat_err /= 2
                if cd & mask:
                    lat_interval = ((lat_interval[0]+lat_interval[1])/2, lat_interval[1])
                else:
                    lat_interval = (lat_interval[0], (lat_interval[0]+lat_interval[1])/2)
            is_even = not is_even
    lat = (lat_interval[0] + lat_interval[1]) / 2
    lon = (lon_interval[0] + lon_interval[1]) / 2
    return lat, lon, lat_err, lon_err

def decode(geohash):
    """
    Decode geohash, returning two strings with latitude and longitude
    containing only relevant digits and with trailing zeroes removed.
    """
    lat, lon, lat_err, lon_err = decode_exactly(geohash)
    # Format to the number of decimals that are known
    lats = "%.*f" % (max(1, int(round(-log10(lat_err)))) - 1, lat)
    lons = "%.*f" % (max(1, int(round(-log10(lon_err)))) - 1, lon)
    if '.' in lats: lats = lats.rstrip('0')
    if '.' in lons: lons = lons.rstrip('0')
    return lats, lons

def encode(latitude, longitude, precision=12):
    """
    Encode a position given in float arguments latitude, longitude to
    a geohash which will have the character count precision.
    """
    lat_interval, lon_interval = (-90.0, 90.0), (-180.0, 180.0)
    geohash = []
    bits = [ 16, 8, 4, 2, 1 ]
    bit = 0
    ch = 0
    even = True
    while len(geohash) < precision:
        if even:
            mid = (lon_interval[0] + lon_interval[1]) / 2
            if longitude > mid:
                ch |= bits[bit]
                lon_interval = (mid, lon_interval[1])
            else:
                lon_interval = (lon_interval[0], mid)
        else:
            mid = (lat_interval[0] + lat_interval[1]) / 2
            if latitude > mid:
                ch |= bits[bit]
                lat_interval = (mid, lat_interval[1])
            else:
                lat_interval = (lat_interval[0], mid)
        even = not even
        if bit < 4:
            bit += 1
        else:
            geohash += __base32[ch]
            bit = 0
            ch = 0
    return ''.join(geohash)
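`decode_exactly()` starts from a 90-degree latitude error and a 180-degree longitude error. For each bit it halves one of them, alternating longitude first, with five bits per character. That yields a closed form for the error margins of a geohash of a given length. The small sketch below (the function name is hypothetical) derives those margins, including the default `precision=12` used by `encode()`:

```python
def geohash_error_margins(length):
    """Return (lat_err, lon_err) in degrees for a geohash of `length` characters.

    Mirrors decode_exactly(): each character holds 5 bits; bits alternate
    longitude, latitude, longitude, ... and each bit halves the matching
    error, starting from 90 degrees (latitude) and 180 degrees (longitude).
    """
    total_bits = 5 * length
    lon_bits = (total_bits + 1) // 2  # bits 0, 2, 4, ... refine longitude
    lat_bits = total_bits // 2        # bits 1, 3, 5, ... refine latitude
    return 90.0 / 2 ** lat_bits, 180.0 / 2 ** lon_bits


METERS_PER_DEGREE_LAT = 111_320  # approximate length of one degree of latitude

print(geohash_error_margins(1))  # (22.5, 22.5)
lat_err, lon_err = geohash_error_margins(12)
print(f"length 12: +/-{lat_err * METERS_PER_DEGREE_LAT:.4f} m north-south")
```

At length 12 the latitude margin is 90/2^30, about 8.4e-8 degrees, or roughly 1 cm on the ground. The 12-character hashes in the JSON output are therefore far finer than the city-level coordinates they encode.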

37

geoparser.py

@@ -1,4 +1,4 @@
#! /usr/bin/env python
#! /usr/bin/env python3

# Getting GEO information from Nginx access.log by IP's.
# Alexey Nizhegolenko 2018

@@ -10,12 +10,28 @@ import os
import re
import sys
import time
import geohash
import logging
import logging.handlers
import geoip2.database
import Geohash
import configparser
from influxdb import InfluxDBClient
from IPy import IP as ipadd

class SyslogBOMFormatter(logging.Formatter):
    def format(self, record):
        result = super().format(record)
        # Prepend the U+FEFF byte-order mark to every formatted message
        return "\ufeff" + result

handler = logging.handlers.SysLogHandler('/dev/log')
formatter = SyslogBOMFormatter(logging.BASIC_FORMAT)
handler.setFormatter(formatter)
# Configure the root logger: the module-level logging.info() and
# logging.exception() calls in this script go through the root logger.
root = logging.getLogger()
root.setLevel(os.environ.get("LOGLEVEL", "INFO"))
root.addHandler(handler)

def logparse(LOGPATH, INFLUXHOST, INFLUXPORT, INFLUXDBDB, INFLUXUSER, INFLUXUSERPASS, MEASUREMENT, GEOIPDB, INODE): # NOQA
    # Preparing variables and params
    IPS = {}
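`SyslogBOMFormatter` prepends `"\ufeff"`, the Unicode byte-order mark. RFC 5424 uses a UTF-8 BOM at the start of MSG to signal UTF-8 content. The sketch below reuses the same formatter idea and formats a hand-built record, so it runs without a `/dev/log` socket. The logger name and log path are only illustrative:

```python
import logging


class SyslogBOMFormatter(logging.Formatter):
    """Same idea as in geoparser.py: prefix each formatted message with U+FEFF."""

    def format(self, record):
        return "\ufeff" + super().format(record)


formatter = SyslogBOMFormatter(logging.BASIC_FORMAT)

# Build a record by hand so the example needs no /dev/log socket.
record = logging.LogRecord(
    name="geostat", level=logging.INFO, pathname="geoparser.py", lineno=0,
    msg="Nginx log file %s not found", args=("/var/log/nginx/access.log",),
    exc_info=None,
)
line = formatter.format(record)

print(repr(line))
print(line.encode("utf-8")[:3])  # b'\xef\xbb\xbf', the UTF-8 encoding of U+FEFF
```

In geoparser.py the formatter is attached to `logging.handlers.SysLogHandler('/dev/log')`. Module-level calls such as `logging.info()` and `logging.exception()` log through the root logger, so that handler only sees them if it is added to `logging.getLogger()` rather than to a named logger.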

@ -53,21 +69,26 @@ def logparse(LOGPATH, INFLUXHOST, INFLUXPORT, INFLUXDBDB, INFLUXUSER, INFLUXUSER

|

|||

m = re_IPV6.match(LINE)

|

||||

IP = m.group(1)

|

||||

|

||||

if ipadd(IP).iptype() == 'PUBLIC' and IP:

|

||||

if ipadd(IP).iptype() == 'PUBLIC' and IP:

|

||||

INFO = GI.city(IP)

|

||||

if INFO is not None:

|

||||

HASH = Geohash.encode(INFO.location.latitude, INFO.location.longitude) # NOQA

|

||||

HASH = geohash.encode(INFO.location.latitude, INFO.location.longitude) # NOQA

|

||||

COUNT['count'] = 1

|

||||

GEOHASH['geohash'] = HASH

|

||||

GEOHASH['host'] = HOSTNAME

|

||||

GEOHASH['country_code'] = INFO.country.iso_code

|

||||

GEOHASH['country_name'] = INFO.country.name

|

||||

GEOHASH['city_name'] = INFO.city.name

|

||||

IPS['tags'] = GEOHASH

|

||||

IPS['fields'] = COUNT

|

||||

IPS['measurement'] = MEASUREMENT

|

||||

METRICS.append(IPS)

|

||||

|

||||

# Sending json data to InfluxDB

|

||||

CLIENT.write_points(METRICS)

|

||||

try:

|

||||

CLIENT.write_points(METRICS)

|

||||

except Exception:

|

||||

logging.exception("Cannot establish connection with InfluxDB server: ") # NOQA

|

||||

|

||||

|

||||

def main():

|

||||

|

|
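The hunk above fills the `tags`, `fields` and `measurement` keys of one point per public IP. The self-contained sketch below builds the same shape, with two substitutions to flag. First, the public-address test uses the standard-library `ipaddress` module's `is_global` instead of IPy's `iptype() == 'PUBLIC'`, and the two can classify some special-purpose ranges differently. Second, the geohash string is passed in rather than computed. `is_public_ip` and `build_point` are hypothetical helper names:

```python
import ipaddress


def is_public_ip(ip):
    """Return True for globally routable IPv4/IPv6 addresses.

    Stand-in for geoparser.py's IPy check (ipadd(IP).iptype() == 'PUBLIC'),
    using the standard-library ipaddress module instead.
    """
    if not ip:  # reject an empty match before trying to parse it
        return False
    try:
        return ipaddress.ip_address(ip).is_global
    except ValueError:  # not a valid IPv4/IPv6 literal
        return False


def build_point(measurement, host, geohash, country_code, country_name, city_name):
    """Build one InfluxDB point with the tag/field layout shown in the README."""
    return {
        "measurement": measurement,
        "tags": {
            "host": host,
            "geohash": geohash,
            "country_code": country_code,
            "country_name": country_name,
            "city_name": city_name,
        },
        "fields": {"count": 1},  # one log line counts as one hit
    }


point = build_point("geodata", "cube", "u8mb76rpv69r", "UA", "Ukraine", "Odessa")
print(is_public_ip("8.8.8.8"), is_public_ip("192.168.1.10"))  # True False
```

A list of such dicts is the input format that influxdb-python's `InfluxDBClient.write_points()` accepts. Building a fresh dict for each point keeps every entry in that list independent of the others.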

@ -94,11 +115,15 @@ def main():

|

|||

if os.path.exists(LOGPATH):

|

||||

logparse(LOGPATH, INFLUXHOST, INFLUXPORT, INFLUXDBDB, INFLUXUSER, INFLUXUSERPASS, MEASUREMENT, GEOIPDB, INODE) # NOQA

|

||||

else:

|

||||

print('File %s not found' % LOGPATH)

|

||||

logging.info('Nginx log file %s not found', LOGPATH)

|

||||

print('Nginx log file %s not found' % LOGPATH)

|

||||

|

||||

|

||||

if __name__ == '__main__':

|

||||

try:

|

||||

main()

|

||||

except Exception:

|

||||

logging.exception("Exception in main(): ")

|

||||

except KeyboardInterrupt:

|

||||

logging.exception("Exception KeyboardInterrupt: ")

|

||||

sys.exit(0)

|

||||

|

|

|

|||
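`main()` passes `logparse()` an `INODE` value, and the README says the script follows the log in "tailf" style rather than reading it from the beginning. The code that does this is not part of this diff. The sketch below shows one hypothetical way to do it, using the illustrative names `read_new_lines` and `follow`. It remembers a byte offset plus the file's inode, restarts from byte 0 when the inode changes (rotation) or the file shrinks (truncation), and holds back an unfinished last line until its newline arrives:

```python
import os
import time


def read_new_lines(path, offset, inode):
    """Return (lines, new_offset, new_inode) for complete lines added after `offset`.

    Hypothetical helper: if the inode differs (the log was rotated) or the
    file is now shorter than `offset` (it was truncated), reading restarts
    at byte 0. An unfinished last line is left for the next call.
    """
    st = os.stat(path)
    if st.st_ino != inode or st.st_size < offset:
        offset = 0
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    complete, newline, _partial = data.rpartition(b"\n")
    if not newline:  # no complete line yet
        return [], offset, st.st_ino
    lines = [raw.decode("utf-8", "replace") for raw in complete.split(b"\n")]
    return lines, offset + len(complete) + 1, st.st_ino


def follow(path, poll_interval=1.0):
    """Yield new lines forever, tail -f style.

    Starts at the end of the file as it is when iteration begins, so
    existing content is skipped.
    """
    st = os.stat(path)
    offset, inode = st.st_size, st.st_ino
    while True:
        lines, offset, inode = read_new_lines(path, offset, inode)
        yield from lines
        if not lines:
            time.sleep(poll_interval)
```

Working in bytes keeps the offset arithmetic exact, which text-mode `tell()` does not guarantee. One known gap: lines written to the old file between the last poll and a rotation are not read. A more careful version would drain the old file descriptor before switching.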

BIN

geostat.png

Binary file not shown. Before: 113 KiB, after: 188 KiB

@@ -5,7 +5,7 @@ StartLimitIntervalSec=0

[Service]
Type=simple
ExecStart=$PWD/venv/bin/python geoparser.py
ExecStart=$PWD/venv/bin/python3 geoparser.py
User=root
WorkingDirectory=$PWD
Restart=always

33

install.sh

@@ -8,30 +8,21 @@
WORKDIR=$(pwd)

echo ""
echo "Downloading latest GeoLiteCity.dat from MaxMind"
echo "Creating virtual ENV and installing all needed requirements..."
sleep 1
wget https://geolite.maxmind.com/download/geoip/database/GeoLite2-City.tar.gz
mkdir tmpgeo
tar -xvf GeoLite2-City.tar.gz -C ./tmpgeo && cd ./tmpgeo/*/.
cp ./GeoLite2-City.mmdb $WORKDIR
cd $WORKDIR
python3 -m venv venv && source venv/bin/activate

pip3 install -r requirements.txt && deactivate

echo ""
echo "Creating virtual ENV and installing requirements..."
sleep 1
virtualenv venv && source venv/bin/activate

pip install -r requirements.txt && deactivate

echo ""
echo "Please edit settings.ini file and set right parameters..."
echo "Please edit the settings.ini file and set the needed parameters..."
sleep 1
cp settings.ini.back settings.ini

"${VISUAL:-"${EDITOR:-vi}"}" "settings.ini"

echo ""
echo "Installing SystemD service..."
echo "Installing GeoStat SystemD service..."
sleep 1
while read line
do

@ -41,6 +32,14 @@ done < "./geostat.service.template" > /lib/systemd/system/geostat.service

|

|||

systemctl enable geostat.service

|

||||

|

||||

echo ""

|

||||

echo "All done, now you can start getting GEO data from your log"

|

||||

echo "run 'systemctl start geostat.service' for this"

|

||||

echo "Last step: register and download the latest GeoLite2 City mmdb file from the maxmind.com website"
echo "Once you have an account on maxmind.com, you can find the needed file at the link below"
echo "https://www.maxmind.com/en/accounts/YOURACCOUNTID/geoip/downloads"
echo "Please don't forget to unpack the GeoLite2-City.mmdb file and put it in the same directory as the geoparser.py"
echo "script, or put it anywhere and then fix the path in settings.ini"

echo ""
echo "All done, you can now start getting GEO data from your Nginx/Apache log file"
echo "Please run 'systemctl start geostat.service' to start the GeoStat script"
echo "You can find the GeoStat application logs in the syslog file if you need them"
echo "Good Luck !"
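The installer renders `geostat.service.template` into `/lib/systemd/system/geostat.service` with a `while read line` loop whose body falls outside this diff. Assuming the loop's job is to expand `$PWD`, which is the only variable visible in the template lines of the `@@ -5,7 +5,7 @@ StartLimitIntervalSec=0` hunk, the same step can be sketched in Python with `string.Template`. Here `render_service_template` is a hypothetical name and `/opt/geostat` is only an example directory:

```python
from string import Template


def render_service_template(template_text, workdir):
    """Replace $PWD in the service template with the install directory.

    Assumes $PWD is the only variable the template uses; safe_substitute()
    leaves any other $NAME untouched instead of raising KeyError.
    """
    return Template(template_text).safe_substitute(PWD=workdir)


# The [Service] lines shown in the service-template hunk of this commit.
TEMPLATE = """\
[Service]
Type=simple
ExecStart=$PWD/venv/bin/python3 geoparser.py
User=root
WorkingDirectory=$PWD
Restart=always
"""

print(render_service_template(TEMPLATE, "/opt/geostat"))
```

The expansion matters because systemd needs the venv interpreter in `ExecStart=` as an absolute path; a literal `$PWD` would not resolve to the install directory.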

@@ -1,5 +1,4 @@
configparser==3.5.0
influxdb==5.2.0
Geohash==1.0
geoip2==2.9.0
IPy==1.00

@@ -8,15 +8,15 @@ geoipdb = ./GeoLite2-City.mmdb

[INFLUXDB]
# Database URL
host = ip_address
host = INFLUXDB_SERVER_IP
port = 8086

#Database name
database = db_name
database = INFLUXDB_DATABASE_NAME

# HTTP Auth
username = "username"
password = "password"
username = INFLUXDB_USER_NAME
password = INFLUXDB_USER_PASSWORD

# Measurement name
measurement = geodata
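The new placeholders drop the double quotes that the old `username` and `password` values had. Assuming geoparser.py reads this file with the standard-library `configparser` (which it imports), that change matters: `configparser` does not strip quotes, so `"username"` would reach InfluxDB with the quote characters included. A short sketch showing both cases:

```python
import configparser

# The [INFLUXDB] section as it looks after this commit.
SETTINGS = """\
[INFLUXDB]
# Database URL
host = INFLUXDB_SERVER_IP
port = 8086

#Database name
database = INFLUXDB_DATABASE_NAME

# HTTP Auth
username = INFLUXDB_USER_NAME
password = INFLUXDB_USER_PASSWORD

# Measurement name
measurement = geodata
"""

config = configparser.ConfigParser()
config.read_string(SETTINGS)
influx = config["INFLUXDB"]
print(influx["host"], influx.getint("port"))  # INFLUXDB_SERVER_IP 8086

# The old style: configparser keeps the double quotes as part of the value.
legacy = configparser.ConfigParser()
legacy.read_string('[INFLUXDB]\nusername = "username"\n')
print(legacy["INFLUXDB"]["username"])  # "username" (quotes included)
```

When filling in real values, write them bare, without surrounding quotes.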
